Statistics is a strange beast.

As important as it is, I would guess that more than 90% of the people that need to do it have no formal training in it, and never will.

Probably fewer than 5% of people who do stats have a Bachelor’s or Master’s degree or a PhD in it.

And yes, 86.3% of all statistics are made up on the spot – I have no basis for these numbers, just my own observation and intuition.

Over the years I’ve often been asked by beginners where they should start, what they should do first, and which parts of statistics they should prioritise to get them to where they want to be (which is usually a higher paid job).

So I reached out to some of our statistics friends and asked them all the same question:

If you had to start statistics all over again, where would you start?

The answers were astounding - they turned out to be a roadmap of how to become a modern statistician from scratch.

In short, how to be a future statistician without ever needing a single lesson!

Some of the responses were also very humorous - I'm sure you'll enjoy reading them.

Let’s jump right in…


Disclosure: This post contains affiliate links. This means that if you click one of the links and make a purchase we may receive a small commission at no extra cost to you. As an Amazon Associate we may earn an affiliate commission for purchases you make when using the links in this page.

You can find further details in our TCs


Information Flows Through Data

When you mention statistics to beginners, the first things that usually come to mind are statistical tests, p-values and not understanding what they’re doing, why they’re doing it or what their results really mean.

And that’s most probably because statistics has historically been taught very poorly, from the perspective of probability distributions, the mathematics of statistical tests and the ability to look up p-values from printed statistical tables.

There is a new generation of statistics teachers that are doing things completely differently, though.

Take this contribution from Natalie Dean, Assistant Professor of Biostatistics at the University of Florida (Twitter: @nataliexdean):

“I guess more than the particular topic, I would think about the larger approach to learning. It is very easy for statistics to feel too abstract, and the simplified examples we teach in classes often don’t reflect how messy things are in the real world”.

She told me that in her classes she likes to emphasise teaching in context using examples from the literature:

“I present examples using a consistent format where I go through the key scientific question, the population, data structure, analysis, and results. Then, the statistical test is placed in a flow from start to finish, and the students can understand how the test results actually helped the researchers answer the question of interest”

Natalie Dean

She said that she tries to teach her students with the goals of helping them decide when these statistical methods should be used, how to decide what variables to put in, and how to interpret the results:

“My goal is to demonstrate that a big part of statistics is deciding what are your outcomes, how are you measuring them, what are your predictors, how are they measured. I like to remind people that someone had to decide on these things, and understanding how to make these decisions will be critically important for non-statisticians who want to run their own studies in the future”.

Hot Tip

Information flows through data.

You need to learn how to ‘read’ and understand that flow

Natalie’s contribution to this post really struck a chord with me because for years I’ve tried to get my students to understand that information flows through data, and you need to learn how to ‘read’ and understand that flow. The statistical toolbox helps you reach that information, but only the analyst can understand and interpret the information contained in the flow.


The ‘Why’ of Statistics is Like Detective Work

Jennifer Stirrup, author and founder of Data Relish (Twitter: @jenstirrup) told me that if she were to start statistics again, she would:

“Realize the ‘why’ of statistics: the power of statistics as a tool to ask better questions and make better decisions that could even change lives”.

Jen told me that she couldn’t see the point of stats when she was in high school, probably due to how it was taught, but that:

“It was not until I did my undergraduate degree that I learned the ‘why’ of statistics and I could see, for the first time, that statistics is like detective work: it is part of decision making, insight discovery and it is just plain fun to find things out”

Jennifer Stirrup

She continued:

“It helped build my confidence because I could offer more than opinions. I wish I’d had that light bulb moment earlier but I wonder how many people never find their light bulb moment. Statistics is a gift that we can give to others so pass it on!”

It’s inspirational to think of statistics as being like detective work, and if more teachers had Jen’s spirit we’d have more people interested in statistics – and more accurate results in the published literature!


That reminds me of an old colleague of mine who passed me his dataset and asked me to confirm his results. I was unable to do so, so I asked him why our results differed. He told me that “when I leave these 2 patients out of the analyses, the p-value becomes significant”. When I asked him why he left those particular patients out, he replied “because when I do the p-value is significant”. When I enquired what scientific justification there was for excluding these patients, he was unrepentant: “I don’t have a reason yet, but I’ll find one!”.

His time would have been better spent considering this suggestion from RStudio’s Garrett Grolemund (Twitter: @StatGarrett):

“If I was teaching myself, I’d start with the basics of Philosophy of Science, then logic, i.e. how do induction, deduction, and abduction work?”

In other words, data comes before results, just as surely as cause precedes effect – something my former colleague had seemingly forgotten!

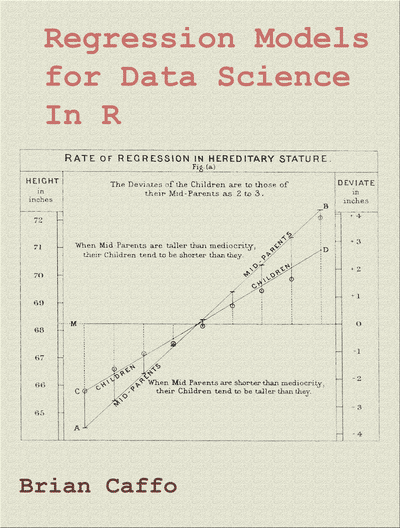

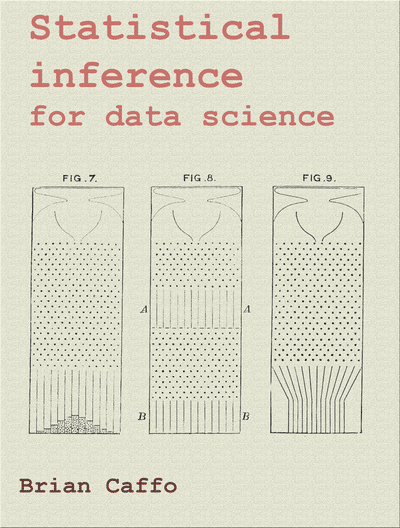

Garrett’s words (more from him later) were echoed by Brian Caffo (YouTube), professor of biostatistics at Johns Hopkins and author of numerous statistics books:

“If I had to start over with statistics, I would have emphasized mathematically oriented theories less and philosophical ideas of scientific conduct, inductive inference, causality, and likelihood more”.

Think Big and Communicate Well

Charles Wheelan (Twitter: @CharlesWheelan), author of Naked Statistics (and other such Naked books), told me that if he could start again he would:

“Start with the big questions statistics can answer, rather than with the math. For example, why do we know that smoking causes cancer? What does it mean if a student is one standard deviation above the mean on a standardized test? How can we possibly predict the outcome of an election with a sample of only a thousand voters?”

Charles tells me that once you understand the power of these findings, it is easier to open the hood on the methodologies that underlie them, and maintains that:

“To ask students to derive a bunch of coefficients before they appreciate the power of the discipline is an exercise in folly”

Charles Wheelan
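Charles’s election question is one you can explore for yourself. The sketch below is only an illustration and not something Charles supplied: it assumes a made-up electorate in which 52% support a candidate, runs 2,000 imaginary polls of 1,000 voters each, and compares the spread of the poll results with the textbook standard error for a proportion. The function name `simulate_polls` and all of the numbers are assumptions chosen for the example.

```python
import random
import statistics

def simulate_polls(true_share=0.52, sample_size=1000, n_polls=2000, seed=1):
    """Simulate many independent polls and return each poll's estimated share."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_polls):
        # Each sampled voter supports the candidate with probability true_share.
        votes = sum(1 for _ in range(sample_size) if rng.random() < true_share)
        estimates.append(votes / sample_size)
    return estimates

estimates = simulate_polls()

# How much do the poll results vary from one poll to the next?
spread = statistics.pstdev(estimates)

# Textbook standard error for a proportion: sqrt(p * (1 - p) / n).
theory = (0.52 * 0.48 / 1000) ** 0.5

# Fraction of polls that land within 3 percentage points of the truth.
within_3_points = sum(abs(e - 0.52) <= 0.03 for e in estimates) / len(estimates)

print(f"simulated spread of poll estimates: {spread:.4f}")
print(f"textbook standard error:            {theory:.4f}")
print(f"share of polls within 3 points:     {within_3_points:.1%}")
```

The point to take away is that the precision of a poll depends on the size of the sample rather than the size of the electorate, which is why a well-drawn sample of a thousand voters can go a long way.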

Interestingly, Chelsea Parlett-Pelleriti (Twitter: @ChelseaParlett), the self-styled Chatistician, has a different take on this:

“First, I would learn a few basic math concepts (matrix operations, basic integration, and logarithms come to mind) to help speed up the process of learning all the other concepts”.

“Second, I would focus on learning the generalized linear model framework instead of treating them as separate entities. I think it's easier to think of t-test, regression, ANOVA, logistic regression, etc., as variations of one model rather than separate models”.

“Third, I would spend time learning how to communicate well, both with experts and new learners. A huge part of my work is explaining to others what I do through visuals and words, so having a solid foundation of communication skills will help immensely”.
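Chelsea’s second point, that a t-test is really one variation of a linear model, can be checked numerically. The sketch below is an illustration under stated assumptions rather than anything Chelsea provided: the two groups of scores are made up, and the helper names `pooled_t_test` and `slope_t_statistic` are hypothetical. It computes the classic equal-variance t statistic and then the t statistic for the slope of a regression on a 0/1 group indicator.

```python
import math
import random
import statistics

rng = random.Random(42)
group_a = [rng.gauss(50, 10) for _ in range(30)]  # made-up control scores
group_b = [rng.gauss(56, 10) for _ in range(30)]  # made-up treatment scores

def pooled_t_test(a, b):
    """Classic two-sample t statistic with pooled (equal) variances."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a) +
                  (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (statistics.mean(b) - statistics.mean(a)) / se

def slope_t_statistic(x, y):
    """Slope of a simple least-squares regression of y on x, and its t statistic."""
    n = len(x)
    mx, my = statistics.mean(x), statistics.mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    # Residual sum of squares, used to estimate the error variance.
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    se_slope = math.sqrt(sse / (n - 2) / sxx)
    return slope, slope / se_slope

# Code group membership as a 0/1 dummy variable and regress the scores on it.
x = [0] * len(group_a) + [1] * len(group_b)
y = group_a + group_b

t_classic = pooled_t_test(group_a, group_b)
slope, t_regression = slope_t_statistic(x, y)

print(f"difference in means: {statistics.mean(group_b) - statistics.mean(group_a):.4f}")
print(f"regression slope:    {slope:.4f}")
print(f"t from t-test:       {t_classic:.4f}")
print(f"t from regression:   {t_regression:.4f}")
```

The slope is the difference between the two group means, and the two t statistics agree, which is the sense in which the t-test is a special case of regression.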

Hot Tip

Spend time learning how to communicate well - having a solid foundation of communication skills will help immensely

I think if we were to agglomerate Charles and Chelsea into a single being (a Charlsea?!??) we could find common ground on this – start with the big questions of statistics, then dive into basic mathematical concepts, followed by the GLM, and infuse it with a large dose of ‘ability to explain to others’ throughout.

Talking about the ability to explain to others, you would do well to listen to the advice of Julia Stewart Lowndes, marine data scientist at the National Center for Ecological Analysis and Synthesis at UCSB (Twitter: @juliesquid), who tells me that if she had to start all over again she would:

“Start with empathy and storytelling to set up motivations behind statistics and code to bring practices to life”.

She also tells me that she would start with Teacup Giraffes, a website dedicated to teaching statistics and the R programming language to students, scientists and stats-enthusiasts.

Thanks to Julia, my 10-year-old daughter has just had her first steps in programming – and loved it!

Understand The Problem Domain

Several years ago when I was a medical statistician I was deep into some analyses and a particular result jumped out of the screen and slapped me on the face like a wet kipper.

“My God, the chemotherapy is killing the patients!” I exclaimed out loud.

What I’d found was that breast cancer patients that were receiving chemotherapy were more likely to die than those who didn’t receive it. This was startling, and I felt like running through the hospital shouting “stop the chemo, stop the chemo!”.

Fortunately, I took a deep breath and thought about it for a moment before realising my rookie mistake. You see, the patients with the more severe disease needed more aggressive treatment, so were given chemotherapy. Those with a milder form of breast cancer didn’t receive the chemo because they didn’t need it. The chemotherapy wasn’t killing the patients – the severity of the disease was!

*Doh* (hand slaps forehead).
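If you want to see how this trap works, here is a minimal simulation. It is a sketch built entirely on made-up numbers, not the hospital data from the story: it assumes 40% of patients have severe disease, that severe cases are far more likely to be given chemotherapy, and that chemotherapy cuts the risk of death by 30% whatever the severity. The function names `simulate_patients` and `death_rate` are hypothetical.

```python
import random

def simulate_patients(n=100_000, seed=7):
    """Return made-up (severe, chemo, died) records. Every rate is an assumption."""
    rng = random.Random(seed)
    patients = []
    for _ in range(n):
        severe = rng.random() < 0.4
        # Severe cases are much more likely to be given chemotherapy.
        chemo = rng.random() < (0.9 if severe else 0.1)
        # Severity sets the baseline risk; chemo cuts it by 30% in both groups.
        risk = 0.50 if severe else 0.10
        if chemo:
            risk *= 0.7
        died = rng.random() < risk
        patients.append((severe, chemo, died))
    return patients

def death_rate(patients, chemo, severe=None):
    """Death rate for a treatment group, optionally within one severity level."""
    rows = [p for p in patients
            if p[1] == chemo and (severe is None or p[0] == severe)]
    return sum(p[2] for p in rows) / len(rows)

patients = simulate_patients()

# Crude comparison: ignores severity, so chemo looks harmful.
print(f"all patients: chemo {death_rate(patients, True):.1%}"
      f" vs no chemo {death_rate(patients, False):.1%}")

# Stratified comparison: within each severity level, chemo looks protective.
for severe in (False, True):
    label = "severe" if severe else "mild"
    print(f"{label:>12}: chemo {death_rate(patients, True, severe):.1%}"
          f" vs no chemo {death_rate(patients, False, severe):.1%}")
```

In this toy world the chemotherapy helps every patient, yet the crude comparison makes it look deadly, because the treated group is dominated by the sickest patients. Only someone who knows how treatment decisions are made would think to split the data by severity.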


And this is why it’s incredibly important to understand the problem domain, something Dez Blanchfield of Sociaall.com knows only too well (Twitter: @dez_blanchfield):

“A professor I studied under once told me ‘don’t write the first line of code, until you can describe the problem you are trying to solve in plain English, sufficiently clearly such that it can be easily understood by anyone who read it’. This was probably one of the simplest and most powerful moments in my life”.

So what would Dez do if he had to go back to the beginning?

“I would invest as much if not more time in understanding the problems I was trying to solve and who I was solving them for”

Dez Blanchfield

He told me that he believes that while the core foundations of statistics are always going to be critical, the domain knowledge is just as important if not more important.

“It is often easier to take a domain expert and teach them how to apply statistical methods to their domain than it is to take a general expert in statistics and teach them to be a domain expert to drive outcomes”.

And this is something that I whole-heartedly endorse. I started out as a medical physicist, then transitioned (via a long and tortuous route) into being a medical statistician, and if I hadn’t had the domain expertise first, I would have made a complete fool of myself.

While it’s not uncommon for me to make a fool of myself (I do it quite often, actually), doing it in front of my boss and fellow researchers was never going to be a good career move!

Data Collection & Data Cleaning

Rachael Tatman of Rasa (Twitter: @rctatman) advises that the first place anyone should start with statistics is in data collection:

“For someone learning statistics with an eye to going into data science, I actually think a really great place to start is data collection rather than stats theory”.

“This gives you a lot of empathy and understanding for whoever is actually getting the data in the first place, as well as helps to build your intuitions about what data points actually mean and what is likely to go wrong. This will give you a big head start when it comes to data cleaning”

Rachael Tatman

This is very good advice indeed.

I have always insisted (and still do) that the key to efficient data cleaning lies in good data collection practices, and the two go hand in hand. Get your data collection wrong and you’re in a world of hurt when it’s time to clean your dataset.

Rachael also makes a really great point about the intuition of the analyst, and I’ve written about this in a previous post, 50 Shades of Grey – The Psychology of a Data Scientist, where I discuss this data intuition and how to get it – and how long it will take you!

Descriptive Statistics & Data Visualisation

When I was a young pup just doing my PhD I made a huge mistake. I had done all my research (building a predictive artificial neural network) and it had gone well, and now all I had to do was write it up and ride off into the sunset.

Or so I thought.

My supervisor had other ideas, though, so he dropped the bomb on me – he asked me where my descriptive statistics chapter was that showed that my data were appropriate and fit-for-purpose.

Hot Tip

Descriptive statistics ARE serious statistics, and should always be your first port of call

I hadn’t checked my dataset at all, and when I did I found out it was riddled with errors, biases and all sorts of problems. I had to scrap it and start my data extraction all over again.

That’s a story for another day, but the point here is that descriptive statistics should never be an after-thought (which they were for me); they should be done before you start doing any ‘serious’ statistics.

No, scrap that – descriptive statistics ARE serious statistics, and should always be your first port of call.

I learnt this the hard way, and these next statisticians are in agreement.

Like Michael Friendly, author and professor of Psychology at York University in Toronto (Twitter: @datavisFriendly), who would start life as a statistician by doing exactly what he did all those years ago. He would audit the undergrad course that John Tukey was teaching while Tukey wrote his Exploratory Data Analysis book:

“It taught me that statistics was much more than cranking out test statistics – it was about insight into problems, often better achieved with graphical methods rather than tables or p-values”

Michael Friendly

He goes on to advise beginners in statistics to:

Alberto Cairo, a data visualisation specialist at the University of Miami (Twitter: @AlbertoCairo), goes back even further, to before John Tukey, to say that:

“I wish more statisticians before John Tukey or Tufte would have taken visualization seriously, not as a way to design pretty pictures, but as serious and rigorous analytical tools. According to Michael Friendly in this article, the first half of the 20th Century was a dark age for statistical graphics, and graphics only made a return towards the end of that century”.

This sentiment was echoed by Matt Harrison, author and Pythonista (Twitter: @__mharrison__), who told me that:

“I would start with the basics. When analyzing a new data set I always come back to summary statistics and simple visualizations like histograms and scatter plots. These foundational tools will take you far”.

This is sage advice. Doing summary statistics and simple visualisations will get you 80% of the way to the answers you’re seeking, in only 20% of the time.
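To make Matt’s advice concrete, here is a minimal sketch of a first look at a new variable. The ages are made up, and the value 430 is planted to stand in for a typing error; the helper `text_histogram` is a hypothetical name, and in practice you would probably reach for a plotting library rather than printing stars.

```python
import statistics
from collections import Counter

# Made-up patient ages; 430 stands in for a typing error (43 with a stray zero).
ages = [34, 45, 52, 38, 61, 47, 55, 430, 49, 58, 42, 66, 51, 39, 57]

# A handful of summary statistics is often enough to raise a red flag.
summary = {
    "n": len(ages),
    "min": min(ages),
    "median": statistics.median(ages),
    "mean": round(statistics.mean(ages), 1),
    "max": max(ages),
}
print(summary)

def text_histogram(values, bin_width=10):
    """Print one row of stars per bin and return the counts keyed by bin start."""
    counts = Counter((v // bin_width) * bin_width for v in values)
    for start in sorted(counts):
        print(f"{start:>4}-{start + bin_width - 1:<4} {'*' * counts[start]}")
    return counts

counts = text_histogram(ages)
```

The mean sitting well above the median and the lonely bar far to the right of the histogram both point straight at the rogue value, before a single statistical test has been run.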


And Danielle Navarro, an Associate Professor at the University of New South Wales (Twitter: @djnavarro) researching statistical methods in behavioural sciences certainly agrees with this, telling me that:

“The undergraduate classes I took focused almost exclusively on inferential statistics, but in my experience statistical inference is the last and smallest part of scientific data analysis. Careful and thoughtful description is harder than it looks and should always precede any attempt to perform inference. So if I had to start over, I would probably focus on data visualisation and exploratory data analysis first”.

To me, this is quite interesting, because as a medical statistician I have used inferential statistics more than any other branch of stats. To hear that in other disciplines it is the last and smallest part of the analysis is surprising. It just goes to show, though, that learning how to understand and communicate the data is far more important than simply learning a bunch of statistical tests (as useful as that may be).

Hot Tip

Data visualization is not only a great tool to communicate, but is also a great way to understand technical details

“If I had to start statistics all over again, I would start with visualization” was the response I got from Jonathan Frye, data scientist and founder of APAE Ventures (Twitter: @JonathanAFrye). He went on:

“Data visualization is not only a great tool to communicate, but is also a great way to understand technical details. Additionally, visualization enables the application of statistics in many areas, so for students interested in sports or those interested in fashion trends, both can start with visualization in their respective interests”.

And this is a really important point – while certain statistical tests and tools may be confined to specific sectors or types of analysis, descriptive statistics and data visualisations are sector- and data-agnostic. You can use them universally to understand your data and communicate your results to others, even if you haven’t done some of the more ‘sexy’ stats (although you should probably do these too).

Statistical Theory vs Applied Statistics

There is a schism in statistics. On the one hand, there are those who have had a formal education in statistical theory, and on the other, those who have learnt by doing. If you’re like me, you’ll be a completely self-taught statistician who looks longingly at the luscious green grass on the other side, wishing that you’d been taught properly so that you wouldn’t make so many foolish mistakes.

But what do other statisticians think about this?

Well, Jacqueline Nolis and I shared the same path, but she doesn’t feel the same way as I do. Jacqueline (Twitter: @skyetetra), a data science consultant and one of the authors of the book Build a Career in Data Science, told me that she’d never had a formal statistics education and instead learned everything she needed on the job:

“If I had to start over I’d do the exact same thing I did the first time! My background was in applied mathematics, and so I only took one statistics course in academia. An on the job education in statistics has worked great for me and the people I know with more rigorous statistics backgrounds don’t seem to use much of what they learned. Any time I’ve needed something like an unusual statistical method I have been able to read up and learn it on my own. The kind of more broad rational thinking about data you need as a data scientist can come from many fields beyond just statistics. For me it was math but I’ve seen many people get it from many backgrounds.”

“I’m very happy with the career I’ve achieved from my limited statistics education – if I started over again I’d be afraid of stepping on a statistics butterfly and changing the timeline so that I end up a UX designer or something”

Jacqueline Nolis

On the other hand, you have Kristen Kehrer of Data Moves Me (Twitter: @DataMovesHer), who had a formal statistics education. She told me:

“Most of my study in undergraduate probability and statistics was very theoretical. If I had to begin again I would have taken a more applied stats course in my undergraduate degree. But even if I was doing it all over, I wouldn’t change my decision to pursue a formal degree in the topic”.

Interestingly, Lisa-Christina Winter, senior product researcher at Chatroulette (Twitter: @lisachwinter), suggested to me exactly the opposite of this:

“I would start out with statistical theory – by understanding basic concepts and why they’re important. To put it into a digestible frame, I’d look at the theory in the context of simple experimental designs”.

So why were the theoretical foundations of statistics important to you?

“Although I didn’t appreciate it at the time when I first learned statistics, I now see how important it was to solve statistical issues manually, by using formula books and distribution tables. When working with someone now, it becomes very clear very quickly that a deeper statistical understanding is super important”.

How so?

“Going through a lot of theoretical stats prior to getting busy on applied stats has kept me away from making loads of mistakes that I would never have been aware of by simply writing syntax”.


And Matt Dancho, who creates data science courses for business students (Twitter: @mdancho84), has some advice to share about learning statistics. He told me:

“I would do as many projects as possible – building products is how you learn. As you run into errors, troubleshoot, create, learn. This is a directly transferable skill to your business”.

He also has a message for all those that tell us to learn how to multitask (I’m sure you all know a University lecturer that’s told you to learn this):

“I would focus on one learning goal – it’s easy to get distracted. This costs you years. Rather, focus on one project or one learning objective. Not every new technology that you hear about. That will kill your productivity. Focus is SUPER CRITICAL to learning”.

Mine Çetinkaya-Rundel of the OpenIntro team (Twitter: @minebocek) also suggests going down the applied stats route:

“I started learning statistics with a traditional introductory statistics course that had us memorise some formulas but not really touch the data. It took me a while after that first course to put the pieces together and understand (and fall in love with!) the entire data analysis cycle”.

Hot Tip

Focus on how to ask the right questions and how to start looking for answers to these questions in real, complex datasets

So what would she do if she had to start stats all over again?

“If I were to start over, I would love to start learning statistics where I can work with the data, doing hands on data analysis (with R!) and also focus on how to ask the right questions and how to start looking for answers to these questions in real, complex datasets”.

And in part 2 of 3 of his advice to statistical newbies, Garrett Grolemund (see, I told you we’d hear from him again, didn’t I?) said that if he had the chance to start statistics again:

“I’d think hard about what randomness is really. Statistics is the applied version of this stuff, but we jump straight to the math/computation too quickly”.

So there we have it. 9 out of 10 ~~cats~~ statisticians prefer applied statistics! So the next time you’re feeling sorry for yourself analysing data without having had the theoretical background, just remember that you’re following the path that many formally trained statisticians would go down if they had their time again. And if it’s good enough for them, well, you know the rest…

Statistics – The Big Picture

Over the years I’ve been asked many times how this stat fits with that, and I’ve found it difficult to answer because the student doesn’t know where either of them fit into the big picture. I wanted to show them the big picture, but I couldn’t find an image that showed all of statistics in one neat diagram.

So I created it myself, and this is my contribution to the post.

Lee Baker (that’s me), author, data scientist and all-round good egg, told me (that’s also me) that:

“If I had to start statistics all over again I would find out how everything in stats fits together so that I understand the whole landscape”

Lee Baker

“My statistics experience has been ‘learn this bit here’, then ‘learn that bit there’ without understanding how these parts relate to each other. It’s only after I created Statistics – The Big Picture that I really understood how to chart a path from probability to experimental design, through data collection and cleaning to data analysis, inferential statistics and on to more specialised topics. I really wished that someone had given me this on day one so that I had a map of where to go!”

And now, for all those of you who, like me, wish that you understood more about how everything in statistics fits together, you can get your own copy of Statistics – The Big Picture, completely free.


Frequentist Statistics vs Bayesian Statistics

I mentioned earlier that there is a schism in statistics between those that had a theoretical basis in stats and those that were more applied. Well, there is another much bigger schism in stats, and that is between the frequentists and the Bayesians.

Let’s see what the statisticians have to say about this debate.

We start with Kirk Borne (Twitter: @KirkDBorne), astrophysicist and rocket scientist (well, rocket data scientist). Surprisingly, he tells me he's never had any interest in being an astronaut!

“I am not a statistician, nor have I ever had a single course in statistics, though I did teach it at a university. How’s that possible?”

Funnily enough, that was the same for me! So where did he get all his stats from?

“I learned basic statistics in undergraduate physics and then I learned more in graduate school and beyond while doing data analysis as an astrophysicist for many years. I then learned more stats when I started exploring data mining, statistical learning, and machine learning about 22 years ago. I have not stopped learning statistics ever since then”.

This is starting to sound eerily like my stats education. All you need to do is drop the ‘astro’ from astrophysics and they’re identical! So what does he think of starting stats all over again?

“I would have started with Bayesian inference instead of devoting all of my early years to simple descriptive data analysis. That would have led me to statistical learning and machine learning much earlier”

Kirk Borne

He continued:

“And I would have learned to explore and exploit the wonders and powers of Bayesian networks much sooner”.

This is also what Frank Harrell, author and professor of biostatistics at Vanderbilt University School of Medicine in Nashville (Twitter: @f2harrell), thinks about hitting the reset button on statistics. He told me:

“I would start with Bayesian statistics and thoroughly learn that before learning anything about sampling distributions or hypothesis tests”.

And Lillian Pierson, CEO of Data-Mania (Twitter: @Strategy_Gal) also mentioned Bayesian statistics when I asked her where she would start:

“If I had to start statistics all over again, I’d start by tackling 3 basics: t-test, Bayesian probability & Pearson correlation”.


Personally, I haven’t done very much Bayesian stats, and it’s one of my biggest regrets in statistics. I can see the potential in doing things the Bayesian way, but as I’ve never had a teacher or a mentor I’ve never really found a way in.

Maybe one day I will – but until then I will continue to pass on the messages from the statisticians in here.

Repeat after me:

Learn Bayesian stats.

Learn Bayesian stats.

LEARN BAYESIAN STATS!
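For anyone looking for a way in, one of the smallest possible Bayesian examples is estimating a proportion. The sketch below is a hedged illustration, not a method recommended by any of the contributors: it assumes a made-up treatment trial, starts from a flat Beta(1, 1) prior and updates it as results arrive. The function names `update_beta`, `beta_mean` and `credible_interval` are hypothetical, and the interval is approximated by sampling rather than computed exactly.

```python
import random

def update_beta(a, b, successes, failures):
    """Conjugate update: a Beta(a, b) prior plus binomial data gives a Beta posterior."""
    return a + successes, b + failures

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

def credible_interval(a, b, level=0.95, n_draws=20_000, seed=3):
    """Approximate a central credible interval by sampling from Beta(a, b)."""
    rng = random.Random(seed)
    draws = sorted(rng.betavariate(a, b) for _ in range(n_draws))
    lower = draws[int((1 - level) / 2 * n_draws)]
    upper = draws[int((1 + level) / 2 * n_draws) - 1]
    return lower, upper

prior = (1, 1)  # flat prior: every response rate is considered equally plausible

# Made-up data: 14 of the first 20 patients respond, then 30 of the next 50.
after_first = update_beta(*prior, successes=14, failures=6)
after_second = update_beta(*after_first, successes=30, failures=20)

# Updating in two steps gives the same posterior as using all the data at once.
all_at_once = update_beta(*prior, successes=44, failures=26)

for label, (a, b) in [("after 20 patients", after_first),
                      ("after 70 patients", after_second)]:
    lower, upper = credible_interval(a, b)
    print(f"{label}: Beta({a}, {b}), mean {beta_mean(a, b):.3f}, "
          f"95% interval roughly {lower:.3f} to {upper:.3f}")
```

Yesterday’s posterior becomes today’s prior, and the interval narrows as the evidence accumulates. That, in miniature, is the appeal of the Bayesian way of thinking.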

Statistical Recipes vs Calculus vs Simulated Statistics

As I was reaching out and gathering quotations I got a rather cryptic response from Josh Wills (Twitter: @josh_wills), software engineer at Slack and founder of the Apache Crunch project (he also describes himself as an ‘ex-statistician’):

“Computation before calculus is the pithy answer”, he told me.

This intrigued me, so I asked him if he could elaborate a little, and here is his reply:

“So I think stats can be and is taught in three ways:

“Most folks do the recipes approach, which doesn’t really help with understanding stuff but is what you do when you don’t know calculus”.

Ah, I understand the ‘set of recipes approach’, but I didn’t know anyone was still doing the calculus approach. He went further:

“I was a math major, so I did the calculus based approach, because that’s what you did back in the day. You mostly do some integrals with a head nod to computational techniques for distributions that are too hard to do via integrals. But the computational approach, even though it was discovered last, is actually the right and good way to teach stats”.

“The computational approach (simulated statistics), even though it was discovered last, is actually the right and good way to teach stats”

Josh Wills

Whew, thank God for that – I thought he was saying that we should all learn the calculus approach!

“The computational approach can be made accessible to folks who don’t know calculus, and it’s actually most of what you use in the hard parts of real world statistics problems anyway. The calculus approach is historically interesting, but (and I feel heretical for saying this) it should be relegated to a later course on the history of statistical thought – not part of the intro sequence”.
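To see what Josh means, take a question that has a calculus answer and a computational answer: what is the chance that the largest of five standard normal values exceeds 2? The sketch below is an illustration under my own assumptions, not an example Josh gave. The calculus route uses the normal distribution function (via `math.erf`), while the computational route simply generates the numbers and counts.

```python
import math
import random

def normal_cdf(z):
    """Standard normal cumulative distribution function, via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

# Calculus route: all five values must fall below 2 for the maximum to do so.
exact = 1 - normal_cdf(2.0) ** 5

# Computational route: simulate the experiment many times and count the hits.
rng = random.Random(2024)
n_sims = 100_000
hits = sum(
    1 for _ in range(n_sims)
    if max(rng.gauss(0, 1) for _ in range(5)) > 2.0
)
simulated = hits / n_sims

print(f"calculus answer:   {exact:.4f}")
print(f"simulation answer: {simulated:.4f}")
```

The two answers agree closely, but the simulation needed no integrals, and it would carry on working if you swapped the normal distribution for one with no tidy formula.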

It’s interesting to see the evolution of statistics in this light and shows just how far we’ve come – and in particular how much computers and computing power have developed over the past couple of decades.

It’s truly mind-blowing to think that when I was doing my PhD 20 years ago it was difficult getting hold of data, and when you did get some, you had to network computers together to get enough computing power. Now we’re all swimming in data and err, well, we still struggle to get enough computing power to do what we want – but it’s still way more than we used to have!

Simulated Statistics is the New Black

I also got a really interesting perspective from Cassie Kozyrkov, Head of Decision Intelligence at Google (Twitter: @quaesita), who told me that she’d:

“Probably enjoy making a bonfire out of printed statistical tables!”

Well, amen to that, but seriously though, where would you start again with stats?

“Simulation! If I had to start all over again, I’d want to start with a simulation-based approach to statistics”.

OK, I’m with you, but why specifically simulation?

“The ‘traditional’ approach taught in most STAT101 classes was developed in the days before computers and is unnecessarily reliant on restrictive assumptions that cram statistical questions into formats you can tackle analytically with common distributions and those nasty obsolete printed tables”.

Hot Tip

With simulations you can go back to first principles and discover the real magic of statistics

Got you. So what exactly have you got against the printed tables?

“Well, I often wonder whether traditional courses do more harm than good, since I keep seeing their survivors making ‘Type III errors’ – correctly answering the wrong convenient questions. With simulation, you can go back to first principles and discover the real magic of statistics”.

Statistics has magic?

“Sure it does! My favorite part is that learning statistics with simulation forces you to confront the role that your assumptions play. After all, in statistics, your assumptions are at least as important as your data, if not more so”.

And when it came to offering his advice, Gregory Piatetsky, founder of KDnuggets (Twitter: @kdnuggets), suggested that:

“I would start with Leo Breiman’s paper on Two Cultures, plus I would study Bayesian inferencing”.

If you haven’t read that paper (which is open access), Leo Breiman lays out the case for algorithmic modelling, where the process that generated the data is treated as a black box and a model is judged on how well it predicts, rather than assuming that the data follow a prescribed statistical model.

This is what Cassie was getting at – statistical models rarely fit real-world data, and we are left to either try to shoe-horn the data into the model (getting the right answer to the wrong question) or switch it up and do something completely different – simulations!
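To make that a little more concrete, here is a minimal sketch of a permutation test, one common way of getting a p-value by simulation instead of from a printed table. The function name, the made-up measurements and the number of shuffles are all my own illustrative assumptions, not anything Cassie supplied. Notice that the one assumption it does make is right there in the code: if there is no real difference, the group labels are interchangeable.

```python
import random
import statistics


def permutation_p_value(group_a, group_b, n_shuffles=5000, seed=0):
    """Two-sided p-value for a difference in means, estimated by shuffling labels."""
    rng = random.Random(seed)
    observed = abs(statistics.fmean(group_a) - statistics.fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_shuffles):
        # Assumption under the null: labels are exchangeable, so shuffle them
        rng.shuffle(pooled)
        diff = abs(statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction so the estimate is never exactly zero
    return (extreme + 1) / (n_shuffles + 1)


# Made-up illustrative measurements, not real data
treated = [12.1, 14.3, 13.8, 15.2, 14.9, 13.5, 16.0, 14.4]
control = [11.2, 12.5, 11.9, 12.8, 13.1, 12.0, 11.5, 12.7]

p = permutation_p_value(treated, control)
print(f"Permutation p-value: {p:.4f}")
```

The p-value is simply the share of shuffled worlds that look at least as extreme as the one you actually observed, with no distribution table in sight.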

Sampling, Resampling and Re-Resampling

And if you’re going to run simulations, then you’re going to need to know how to sample and resample data. Re-enter our old friend, Garrett Grolemund, who, in his final piece of sage advice, told me that:

“If I had to jump straight to teaching statistics I’d begin with resampling and stick with it for as long as I could: start with resampling and use it to demonstrate sample variation, sample bias, the bootstrap, cross-validation, hypothesis tests”.
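As a rough illustration of the kind of resampling Garrett is talking about, here is a minimal sketch of the percentile bootstrap for a median. The helper name, the made-up sample and the choice of 5,000 resamples are my own assumptions for the sake of the example.

```python
import random
import statistics


def bootstrap_interval(data, stat=statistics.median, n_resamples=5000,
                       level=0.95, seed=0):
    """Percentile bootstrap interval for `stat`, resampling `data` with replacement."""
    rng = random.Random(seed)
    n = len(data)
    # Each resample is the same size as the original, drawn with replacement
    estimates = sorted(stat(rng.choices(data, k=n)) for _ in range(n_resamples))
    lo_idx = int((1 - level) / 2 * n_resamples)
    hi_idx = int((1 + level) / 2 * n_resamples) - 1
    return estimates[lo_idx], estimates[hi_idx]


# Made-up, right-skewed illustrative sample (say, waiting times in minutes)
sample = [2.1, 3.4, 1.8, 9.7, 4.2, 2.9, 15.3, 3.1, 5.6, 2.4, 7.8, 3.9]

low, high = bootstrap_interval(sample)
print(f"Sample median: {statistics.median(sample)}")
print(f"95% bootstrap interval for the median: ({low}, {high})")
```

The spread of the resampled medians is a direct picture of sample variation, which is why resampling makes such a natural starting point. With a sample this small the interval is only a rough guide, so treat it as a teaching device rather than a final answer.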

And talking of simulations, James D. Long, a New York quant risk manager and co-author of the R Cookbook (Twitter: @CMastication), gave me this observation via the Twittersphere:

“I’d start with simulation. Build up intuition of sample size from that. Build t-tests from first-principles simulations, I think. Not sure that would work, but would like to try it”.

This kicked off a discussion in which Diana Thomas, professor of mathematics at the United States Military Academy at West Point (Twitter: @MathArmy), said that “we are teaching statistics like this at West Point and it’s wonderful!”. In turn, Mike O’Connor (Twitter: @mtoconnor3) replied that “this is kind of how @rlmcelreath approaches things in ‘Rethinking’ and it really helped me with some of the trickier concepts” (referring to Richard McElreath, author of Statistical Rethinking, a book about Bayesian statistics).
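Here is one way James’s idea might look in practice: a minimal sketch that rebuilds the one-sample t-test cutoff by simulating a world in which the null hypothesis is true. The function name, the sample sizes and the number of simulations are my own assumptions, and it is only a sketch of the idea, not how anyone quoted here actually teaches it.

```python
import math
import random
import statistics


def simulated_t_critical(n, n_sims=10000, alpha=0.05, seed=0):
    """Simulate the cutoff that |t| exceeds only `alpha` of the time under the null."""
    rng = random.Random(seed)
    t_values = []
    for _ in range(n_sims):
        # Null world: normally distributed data whose true mean really is 0
        sample = [rng.gauss(0, 1) for _ in range(n)]
        standard_error = statistics.stdev(sample) / math.sqrt(n)
        t_values.append(abs(statistics.fmean(sample) / standard_error))
    t_values.sort()
    return t_values[int((1 - alpha) * n_sims)]


cutoffs = {n: simulated_t_critical(n) for n in (5, 30, 100)}
for n, cutoff in cutoffs.items():
    print(f"n = {n:>3}: simulated two-sided 5% cutoff = {cutoff:.2f}")
```

The simulated cutoffs should land close to the values in those printed tables (roughly 2.78, 2.05 and 1.98 for 4, 29 and 99 degrees of freedom), and watching them shrink as n grows is a nice way to build intuition about sample size.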

Statistical Simulations Save the Environment – and Your Sanity!

So what kind of simulations are we talking about here? And what use are they in the real world?

Well, Ganapathi Pulipaka, chief data scientist at Accenture (Twitter: @gp_pulipaka) relayed to me the story of how a supercomputer helped prevent nuclear weapons testing by using statistical simulations. Ganapathi told me that:

Ganapathi Pulipaka

“The best part of statistics is to create simulations, where there is not always a single mathematical approach available”

OK, so tell me more about the need for simulations…

“A few decades ago, worldwide nuclear warhead programmes used to destroy islands and make holes with nuclear bomb explosions. Just a couple of years ago, a supercomputer broke the world record for bomb simulations of nuclear weapons with the use of statistics and mathematics”.

And the real-world use?

“Scientists don’t turn islands into holes anymore by exploding bombs before they first simulate the trillions of particles and rewind through their trajectories to understand the energetic characteristics. The supercomputer created a trillion files in under two minutes, which is a world record for this type of simulation”.

So if you want to understand the behaviour of nuclear bombs in certain situations there is no longer any need to set off hundreds of nuclear bombs to see what happens. These days we have the capability of testing in the virtual world rather than the real world. That’s quite useful for the environment – and our collective sanity too!

That reminds me of some research I was doing way back in 2000 whilst studying for my Master’s Degree in Medical Physics.

There was this idea that a small-scale PET scanner* might be able to detect metastatic cancer in axillary (in the armpit) lymph nodes more accurately than the existing full-size PET detectors.

*PET: Positron Emission Tomography – it has nothing to do with your dog, unless you want to scan your pet with a PET!

The problem was that small-scale PET detectors were only in development and were incredibly expensive, so we decided to create a statistical simulation to test our hypothesis.

My first job was to build a simulation of the torso of a human being. To do this I used the Visible Human Project to create an anatomically correct and lifelike virtual representation of a human being. If you don’t know what the VHP is, I suggest you check it out – it’s equally cool, fascinating and gruesome all at the same time!

The next job was to create virtual representations of a full-size PET scanner (this was the control) and a variety of small-scale PET scanners – ring-shaped, a flat-plate arrangement, a curved-plate arrangement and many more.

So now we had a virtual cancer patient and a variety of virtual PET detectors, we could run simulations – millions of them. We ‘spiked’ virtual lymph nodes with various amounts of virtual radioactivity (positron emitters) and ran Monte Carlo simulations with each of the virtual detector arrangements.

The simulations took a huge amount of computing power and we had to run simulations overnight by linking together computers in the lab. Each morning we had a new set of results to inspect, then we would adjust and go again the next evening.

This was a cool time – statistical simulations like this weren’t yet mainstream, and the term ‘Data Science’ was still almost a decade away from being widely used.

So which detector arrangement was the winner?

Well, actually, the results told us that none of the small-scale detectors were more accurate than the existing full-size PET scanner for these purposes, and as a consequence we cancelled the research programme – saving millions of pounds on unnecessary research!

So you see, sometimes a null result can be a beautiful thing!

Simulations for Imperfect Data

Last, but not least, David Diez, senior quantitative analyst at YouTube and co-founder of OpenIntro, told me that if he were to start statistics all over again he would:

David Diez

“Code simulations to check how shifts in data and populations impact statistical methods. No data set is perfect, and gaining intuition for how methods react to imperfect data is invaluable”
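To give a flavour of what David means, here is a minimal sketch that checks how two familiar estimators, the mean and the median, cope when a small share of the data is wildly off. The function name, the 10% contamination rate and the size of the wild values are all my own illustrative assumptions.

```python
import math
import random
import statistics


def rmse_of_estimator(estimator, contamination, n=50, n_sims=2000, seed=0):
    """Root-mean-square error of `estimator` when the true centre is 0."""
    rng = random.Random(seed)
    squared_errors = []
    for _ in range(n_sims):
        sample = [
            # With probability `contamination`, draw a wild value instead of a clean one
            rng.gauss(0, 10) if rng.random() < contamination else rng.gauss(0, 1)
            for _ in range(n)
        ]
        squared_errors.append(estimator(sample) ** 2)
    return math.sqrt(statistics.fmean(squared_errors))


results = {}
for label, rate in (("clean", 0.0), ("10% contaminated", 0.1)):
    results[label] = (
        rmse_of_estimator(statistics.fmean, rate),
        rmse_of_estimator(statistics.median, rate),
    )
    mean_rmse, median_rmse = results[label]
    print(f"{label:>16}: mean RMSE = {mean_rmse:.3f}, median RMSE = {median_rmse:.3f}")
```

On clean data the mean should come out slightly ahead, but once the wild values creep in the median should hold up far better. That is exactly the kind of intuition you only get by breaking your data on purpose.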

Summary

Well, what a journey this has turned out to be!

When I reached out to my statistical friends, neighbours and countrymen for a small quotation on what they would do if they were to hit the reset button on their statistical careers, I never expected I’d get such great comments – and so many of them too!

I thought it might end up as a long list of statistical tests for newbies to work through, but no, there’s an entire road-map to becoming a future statistician for anyone just starting out – and without attending a single lesson!

To recap, we had Natalie Dean teaching us that we need to learn how to read the flow of information through the data to answer the question of interest. Then Jen Stirrup likened statistics to ‘Detective Work’.

That’s just inspirational!

Then Dez Blanchfield taught us that understanding the problem domain is every bit as important as the statistics themselves, and Rachel Tatman taught us that data collection and cleaning are critical first steps when you get started working with data, helping you to build an intuition about your data and what is likely to go wrong.

After this, Michael Friendly urged us to learn statistics from the perspective of data visualisation and focus on understanding phenomena visually, rather than through test statistics.

And then we had a whole bunch of statisticians espousing the virtues of applied statistics over a theoretical background – none more so than Jacqueline Nolis, who didn’t have a formal statistics background, but wouldn’t change a thing!

By the way, did you remember to get your free download of Statistics – The Big Picture, a poster that shows you how everything in statistics fits together? If you forgot, you can get it below.

Following on, we had rocket man Kirk Borne encouraging us to blast off into Bayesian stats to explore strange new worlds… Hold on, that sounds vaguely familiar somehow…

And then came a stellar moment (OK, I’ll stop with the space gags now) from Josh Wills when he laid out how we went from a choice of statistical recipes or calculus to the new Holy Grail – simulated statistics!

Then Cassie Kozyrkov went and burnt all the statistical tables.

Bloody vandal!

She did it all for a good cause, though, to make us realise that the old ways led us to reach the wrong conclusions because we were asking the questions that we could answer rather than the questions that we really needed to answer.

The light bulb just went on!

And to round it all off, David Diez reminded us that data are imperfect and we can use simulations to check how shifts in data impact statistical methods.

Wow.

Mind. Blown!

Statistics - The Big Picture

The ONLY place to download your FREE Ultra-HD Hi-Def copy of Statistics - The Big Picture

Après Ski

I’ll round this off now with a contribution from Christian Guttmann, executive director of the Nordic Artificial Intelligence Institute (Twitter: @ChrisXtg), whose mind was firmly on the next steps one might take after statistics when he told me that:

“Statistical thinking and methods are at the basis of many machine learning approaches – and learning statistical roots are an essential toolkit in the era of Artificial Intelligence”.

For me, a newbie could do far worse than following the advice of the statisticians in this blog post!

And the final words go to Gil Press (Twitter: @GilPress) of What’s The Big Data?, who said:

“For my part, I don’t see any reason to start statistics all over again!”

Gil Press

I'll drink to that!

Comment

Does anything in this post strike a chord with you?

Do you agree or disagree with anything that any of these experts say?

If so, please leave your comments below - we would love to hear your thoughts!